The Fight Against Bots

By Eliza Schuh

March 10, 2021

Since the advent of computers, we have been forced to confront the question of humanity. What distinguishes a human, and how does one recognize and deter non-humans that simulate those distinguishing traits? Today, bots make those questions increasingly pressing. According to Imperva’s annual Bad Bot Report, bots accounted for 37% of all internet traffic in 2020, and 24% of all internet traffic came from “bad” bots with malicious intent. With bots this prevalent, companies need ways to distinguish them from human users. However, some bot detection methods are highly invasive and raise privacy concerns, concerns that can be avoided by using humanID for bot protection.

There are good bots and bad bots. Good bots are owned by legitimate companies and are used mainly to automate repetitive tasks. Examples include chatbots like Apple’s Siri, web spiders owned by search engines that analyze and index websites, and copyright bots that scan the web for copyright infringement. Good bots can be useful, but bad bots can have devastating and far-reaching effects. They can launch distributed denial-of-service (DDoS) attacks that make the targeted service unavailable to its users. They can also spread fake or misleading news with frightening speed and even impersonate users. Each DDoS attack costs the targeted company over $740,000 on average. Misinformation pushed by Russian bots is widely and credibly documented to have interfered in the 2016 US presidential election. Despite their widespread use and the security risk they pose, bots are often misunderstood. They vary wildly in complexity and are commonly grouped into generations, each more sophisticated and harder to detect than the last.

To better understand how to tell bots apart from humans, we should look at humanity’s first proposed test of artificial intelligence. The Turing test was proposed in 1950 by Alan Turing, who is widely regarded as the father of modern computer science. To pass, a computer must engage a remotely located human in conversation and convince them that it, too, is human. The Turing test was devised to measure the sophistication of artificial intelligence, but it also works in reverse. A computer that cannot convincingly imitate a human in conversation reveals that it is not human. With a few notable exceptions, computers (and bots) still cannot genuinely pass the Turing test, which arguably requires the ability to think independently. But companies have neither the time nor the means to engage every user in conversation to find out whether they are human. Even if they did, the Turing test is far from perfect. Because it is incredibly difficult to distinguish original thought from sophisticated parroting, many bots would likely pass, producing false negatives.

It is generally accepted that there are four generations of bots, with first-generation bots being the least advanced and fourth-generation bots the most. First- and second-generation bots are fairly easy to identify. First-generation bots cannot maintain cookies or execute JavaScript, so they fail JavaScript tests. Second-generation bots display recognizable, consistent JavaScript patterns. Third- and fourth-generation bots are much harder to detect and can more convincingly impersonate human users, so they are the focus of this article. They can imitate browsers, perform nonlinear mouse movements, and carry out slow DDoS attacks. Detecting third-generation bots typically requires challenge tests, fingerprinting, and behavioral analysis, since human actions still display a greater degree of randomness than these bots can produce. Fourth-generation bots usually rely on machine learning and more advanced AI. They use headless browsers, which are browsers without a graphical interface, and they closely mimic human randomness. Sophisticated AI is usually necessary to detect them.
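The JavaScript and cookie checks that trip up first-generation bots can be sketched in Python. In this minimal illustration, the server hands the browser a script that sets a signed cookie. A client that never runs JavaScript or keeps cookies never sends that cookie back. The names `issue_challenge`, `passed_js_challenge`, and the `js_token` cookie are hypothetical and do not belong to any product's API. A scraper could still read the token straight out of the HTML, so a real challenge would make the browser compute it. Second-generation and later bots do run JavaScript, so a check like this only filters out the simplest bots.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side secret; a real deployment would load it from configuration.
SECRET_KEY = secrets.token_bytes(32)


def expected_token(nonce: str) -> str:
    """Token the challenge script should store, derived from the nonce with HMAC-SHA256."""
    return hmac.new(SECRET_KEY, nonce.encode(), hashlib.sha256).hexdigest()


def issue_challenge():
    """Return a fresh nonce and an HTML snippet whose script sets the js_token cookie.

    Only a client that executes JavaScript and stores cookies will send the
    cookie back on its next request. (Sketch only: the token is visible in the
    HTML, so a real challenge would have the browser compute it instead.)
    """
    nonce = secrets.token_hex(8)
    snippet = f'<script>document.cookie = "js_token={expected_token(nonce)}; path=/";</script>'
    return nonce, snippet


def passed_js_challenge(nonce: str, cookies: dict) -> bool:
    """True if the request carries the cookie the challenge script would have set."""
    returned = cookies.get("js_token", "")
    # Constant-time comparison so response timing does not leak the expected token.
    return hmac.compare_digest(returned.encode(), expected_token(nonce).encode())
```

A browser that ran the snippet returns the matching `js_token` cookie and passes. A first-generation bot that sends no cookie, or a forged one, fails.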

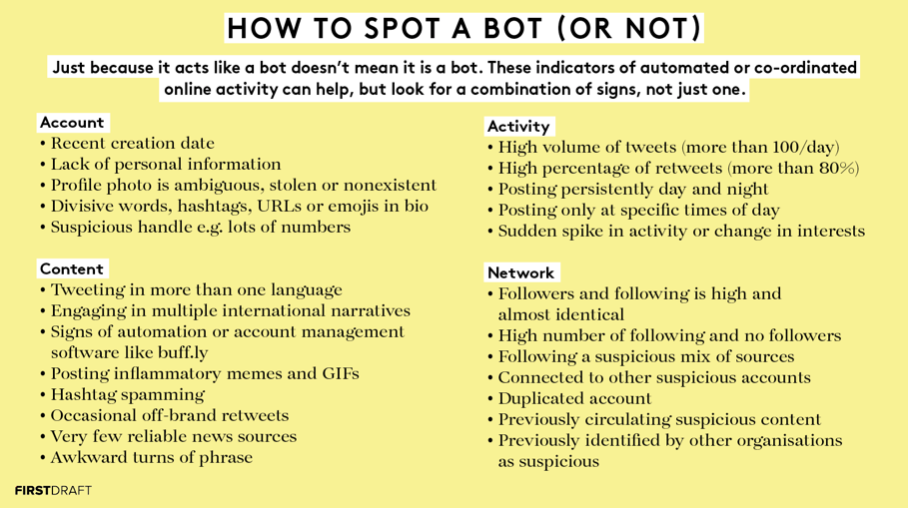

Even sophisticated bots often exhibit common behaviors that can be used to flag them. First Draft News, a project by the Google News Lab to fight disinformation, uses a table to describe bot activity, particularly on Twitter. The table is divided into four categories: Account, Activity, Content, and Network. Examples include a recent account creation date and posting at the same times each day.
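Two of the Account and Activity signals mentioned above are easy to compute: a recent creation date, and posting at the same times each day. The sketch below is an illustration only. The 30-day and 1-bit thresholds and the function names are hypothetical. Measuring schedule regularity with the Shannon entropy of posting hours is our own choice, not something First Draft prescribes.

```python
import math
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical thresholds chosen for illustration, not taken from First Draft.
MAX_NEW_ACCOUNT_DAYS = 30
MIN_HOUR_ENTROPY_BITS = 1.0


def hour_entropy(post_times):
    """Shannon entropy (in bits) of the hour-of-day distribution of posts.

    0 bits means every post lands in the same hour; log2(24) (about 4.58 bits)
    means posts are spread evenly across the day.
    """
    counts = Counter(t.hour for t in post_times)
    total = len(post_times)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def account_red_flags(created_at, post_times, now):
    """Return the account- and activity-level red flags this account trips."""
    flags = []
    if (now - created_at).days < MAX_NEW_ACCOUNT_DAYS:
        flags.append("recently created account")
    # Only judge the schedule when there are posts to measure.
    if post_times and hour_entropy(post_times) < MIN_HOUR_ENTROPY_BITS:
        flags.append("posts at consistent times of day")
    return flags
```

For example, an account created five days before `now` that posted at 9:00 every morning trips both flags. An older account whose posts are spread evenly across the day trips neither.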

When trying to determine whether a user is a bot, it is important to consider both false negatives (a bot slips past) and false positives (a human is flagged as a bot). False positives occur when a human exhibits bot-like activity. Not every multilingual Twitter user who recently created an account and posts inflammatory memes is a bot, so more information is often needed. The more of these red flags a user matches, however, the more likely that user is a bot.
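The idea that more red flags mean a higher likelihood of automation can be sketched as a weighted checklist. This version sends borderline accounts to human review instead of blocking them outright, which limits false positives. The signals, weights, thresholds, and function names are hypothetical illustrations loosely drawn from the examples in this article. They are not First Draft's actual table.

```python
# Hypothetical red flags grouped by First Draft's four categories.
# The specific signals and weights are illustrative assumptions.
RED_FLAG_WEIGHTS = {
    "account": {"recently_created": 1.0},
    "activity": {"consistent_posting_times": 1.0, "high_posting_volume": 1.0},
    "content": {"multilingual_posts": 0.5, "inflammatory_memes": 0.5},
    "network": {"amplified_by_suspected_bots": 1.0},
}


def bot_score(observed_flags):
    """Share of the total red-flag weight this user trips, from 0.0 to 1.0.

    `observed_flags` is a set of (category, flag) pairs; unknown pairs are ignored.
    """
    total = sum(w for flags in RED_FLAG_WEIGHTS.values() for w in flags.values())
    hit = sum(
        RED_FLAG_WEIGHTS[category][flag]
        for category, flag in observed_flags
        if flag in RED_FLAG_WEIGHTS.get(category, {})
    )
    return hit / total


def triage(observed_flags, review_threshold=0.3, block_threshold=0.7):
    """Map a score to an action; the thresholds are hypothetical."""
    score = bot_score(observed_flags)
    if score >= block_threshold:
        return "likely bot"
    if score >= review_threshold:
        return "needs human review"
    return "likely human"
```

With these assumed weights, a new account that posts multilingual, inflammatory memes scores 0.4. It is sent to review rather than blocked, which reflects the false-positive concern above.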

CAPTCHA and reCAPTCHA are also common bot-detection services. CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. CAPTCHAs typically ask users to do one of several tasks:

- read distorted words
- identify items in segmented, blurry pictures
- tick a check box
- pass an audio test intended for blind and visually impaired users

Less sophisticated bots have a hard time reading distorted letters and usually enter random characters, which makes them statistically unlikely to pass. However, more sophisticated bots, most notably fourth-generation bots, can pass these tests with the help of machine learning. The reCAPTCHA check box does not test the act of clicking itself, but rather the mouse movements leading up to the click.
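Google does not publish the exact signals reCAPTCHA uses, so the following is only a sketch of the general idea behind mouse-movement analysis. The function names and thresholds are hypothetical. The assumption is that a scripted cursor tends to move in a straight line at perfectly regular intervals, while human movements curve and vary in timing. The sketch compares straight-line distance to distance travelled and measures how much the gaps between mouse events vary. Fourth-generation bots that deliberately add randomness can defeat a check this simple.

```python
import math
import statistics


def path_straightness(points):
    """Straight-line distance divided by distance travelled (1.0 = perfectly straight)."""
    travelled = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if travelled == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / travelled


def timing_variation(timestamps):
    """Coefficient of variation of the gaps between mouse events (0.0 = perfectly regular).

    Expects at least two timestamps.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    mean = statistics.mean(gaps)
    return statistics.pstdev(gaps) / mean if mean else 0.0


def looks_scripted(points, timestamps, straightness_cutoff=0.98, timing_cv_cutoff=0.05):
    """Flag movement that is both too straight and too evenly timed (hypothetical cutoffs)."""
    return (
        path_straightness(points) > straightness_cutoff
        and timing_variation(timestamps) < timing_cv_cutoff
    )
```

A cursor that jumps along a straight line every 16 ms is flagged. A curved path with uneven gaps between events is not.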

Some reCAPTCHA tests require no user input at all. Instead, they holistically assess the cookies stored in a user’s browser and the user’s browsing history, which raises privacy concerns. Google owns reCAPTCHA. The company has claimed not to use reCAPTCHA data for ad targeting, but users have no way to verify that claim. At minimum, reCAPTCHA alerts Google to which sites a person visits.

Bots run rampant on social media, making up an estimated 5% of all Facebook accounts and 15% of all Twitter accounts. Bots can spread fake news at an alarming rate. A bot account posts misinformation, and other bots then swarm to like it in hopes of elevating the post and making it seem more legitimate to human observers. During a pandemic, this is especially alarming. A Carnegie Mellon study in May 2020 estimated that 45% of tweets about COVID-19 were bot-generated, and a plurality were conspiratorial. In March 2020, Reuters reported on an EU document alleging that Russia had begun a massive bot campaign targeting the US and Europe with disinformation about the virus. In this way, preventing bots means preventing fake news and safeguarding the truth.
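The swarming pattern described above suggests one simple signal: many likes from recently created accounts within minutes of a post appearing. The following sketch is a hypothetical illustration. The function name, the five-minute window, the 30-day account-age cutoff, and the 20-like threshold are all assumptions, not any platform's documented method.

```python
from datetime import datetime, timedelta


def suspicious_like_burst(likes, post_time, window=timedelta(minutes=5),
                          max_account_age=timedelta(days=30), min_young_likes=20):
    """Return True if many recently created accounts liked the post right after it appeared.

    `likes` is a list of (liked_at, account_created_at) datetime pairs.
    All thresholds are hypothetical values chosen for illustration.
    """
    young_early_likes = [
        liked_at
        for liked_at, created_at in likes
        # The like came soon after posting...
        if timedelta(0) <= liked_at - post_time <= window
        # ...from an account that was still new when it liked the post.
        and liked_at - created_at <= max_account_age
    ]
    return len(young_early_likes) >= min_young_likes
```

Under these assumptions, 25 likes arriving within four minutes from two-day-old accounts trigger the check. Likes trickling in over a day from long-established accounts do not.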

Bot detection is becoming both more important and more difficult as bots grow more sophisticated, and the fight against bad bots is directly tied to the fight against fake news. Our current methods of bot protection often depend on a willingness to trade privacy for security, but we at humanID are convinced that this need not be the case.