What is Data Scraping and is it Illegal?

By Christopher Fryzel

May 5, 2021

What are Data and Web Scraping?

Generally, data scraping refers to one program extracting data from the output of another program. Its most common form is web scraping, the process of using an application to extract valuable information from a website. The collection is automated and runs over the Internet. There are generally two parts to web scraping: the crawler and the scraper. The crawler is an algorithm that discovers and indexes internet content by following links, and the scraper extracts data from the specific pages the crawler finds.
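To make the crawler half concrete, here is a minimal sketch in Python. It assumes a tiny in-memory "website" (the `PAGES` dictionary and its URLs are hypothetical) instead of live HTTP requests, and it discovers pages breadth-first by following links. A scraper would then be pointed at the URLs it returns.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "website": URL -> HTML. A real crawler would fetch
# these pages over HTTP instead of reading them from a dictionary.
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/b": '<a href="/">Home</a>',
    "https://example.com/c": "<p>No links here.</p>",
}


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, pages):
    """Breadth-first crawl; returns URLs in the order they were discovered."""
    seen = [start_url]
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        collector = LinkCollector()
        collector.feed(pages.get(url, ""))
        for href in collector.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute in pages and absolute not in seen:
                seen.append(absolute)
                queue.append(absolute)
    return seen


discovered = crawl("https://example.com/", PAGES)
```

The `seen` list is what keeps the crawler from looping forever when pages link back to each other, as `/b` does to the home page here.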

How do Web Scrapers Work?

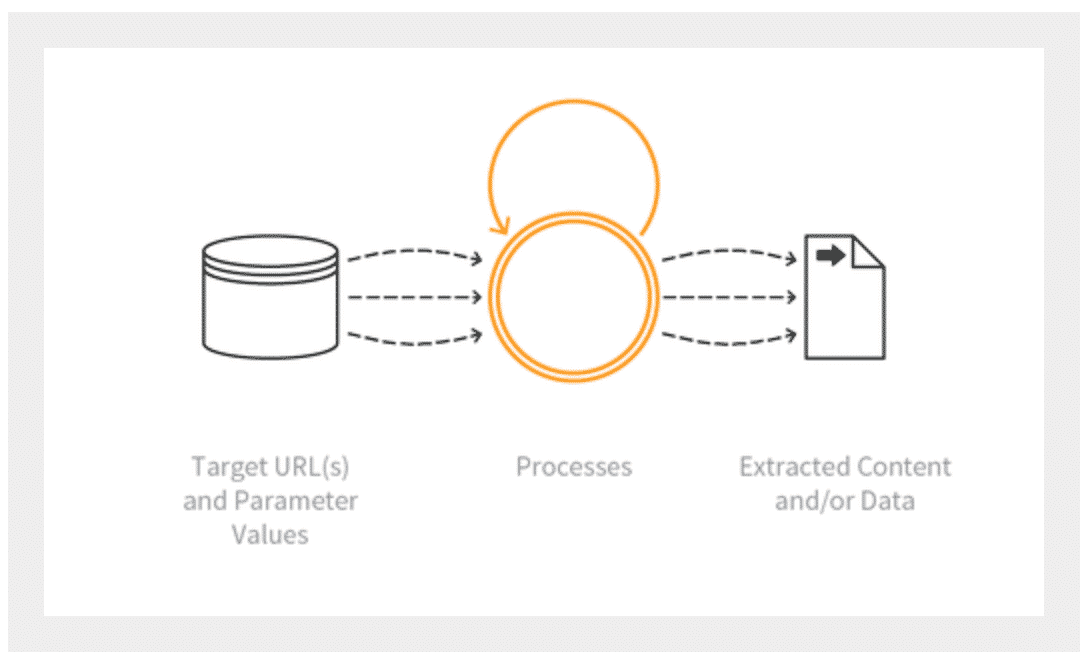

Web scrapers are built to extract either the information specified by their designer or all the data on a site. Logically, extracting all the data results in slower scraping. Before a web scraper can run, it needs a URL, which is provided by the crawler. The scraper then downloads the HTML code from the specified pages and obtains the required data from the code. The program then outputs the newly acquired data to a file of the user’s choosing.
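The extract-and-output steps can be sketched with Python's standard library alone. This is only an illustration under stated assumptions: the HTML is a hypothetical sample that stands in for a downloaded page, the "specified information" is assumed to be every `<h2 class="title">` element, and an in-memory buffer stands in for the output file.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this from a URL.
SAMPLE_HTML = """
<html><body>
  <h2 class="title">First article</h2>
  <p>Body text the designer did not ask for.</p>
  <h2 class="title">Second article</h2>
</body></html>
"""


class TitleScraper(HTMLParser):
    """Collects the text of every <h2 class="title"> element."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "h2" and ("class", "title") in attrs:
            self._in_title = True
            self.titles.append("")

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False


def scrape_titles(html):
    """Return the stripped text of each matching title, in page order."""
    parser = TitleScraper()
    parser.feed(html)
    return [title.strip() for title in parser.titles]


def write_csv(titles, out):
    """Write one title per row to any text file object."""
    writer = csv.writer(out)
    writer.writerow(["title"])
    for title in titles:
        writer.writerow([title])


buffer = io.StringIO()  # stand-in for open("titles.csv", "w", newline="")
write_csv(scrape_titles(SAMPLE_HTML), buffer)
```

Because the scraper only keeps the elements it was told to look for, the paragraph text is skipped, which is why targeted scraping is faster than extracting everything.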

What is the Purpose of Scraping?

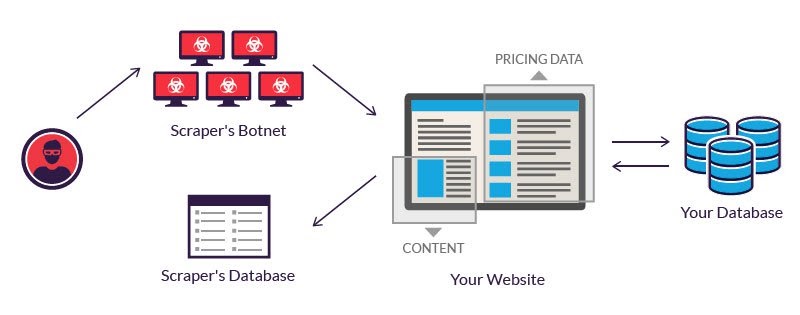

A web application generally doesn’t expose all its data through a consumable API, but a scraper bot can collect the website data anyway. Web scraping works by getting the HTML document from the website, then parsing it for a specific data pattern, and finally converting the scraped data into the format that the author of the program specified. The most common objectives for scraper bots include content scraping, price scraping, and contact scraping. Content scraping involves gathering and repurposing data from a site; for example, a competitor of TripAdvisor could scrape all the review content from TripAdvisor and reproduce it on its own site. Meanwhile, price scraping aggregates price data, which may allow the scraper to gain a competitive edge. For example, a competitor of Amazon may want to scrape Amazon's pricing data every day to track price changes for certain products. Contact scraping allows scrapers to gather contact information in order to sell it, build bulk mailing lists, place robocalls, or attempt social engineering; scammers and spammers use this scraping method to find new victims. Some rather benign use cases for web scraping are news monitoring, lead generation, and marketing research.
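As an illustration of "parse for a specific data pattern, then convert to the chosen format," the following Python sketch pulls prices out of a product listing and emits JSON. The markup, the `data-sku` attribute, and the SKU values are all hypothetical, and a regular expression is used only because the sample is tiny; regexes are fragile against real-world HTML, where a proper parser is the safer choice.

```python
import json
import re

# Hypothetical product listing; not taken from any real site.
PRODUCT_HTML = """
<li class="product" data-sku="A100"><span class="price">$19.99</span></li>
<li class="product" data-sku="B200"><span class="price">$5.00</span></li>
"""

# The "specific data pattern": a SKU attribute followed by a dollar price.
PRICE_PATTERN = re.compile(
    r'data-sku="(?P<sku>[^"]+)">\s*'
    r'<span class="price">\$(?P<price>\d+\.\d{2})</span>'
)


def extract_prices(html):
    """Return one {"sku", "price"} record per product found in the HTML."""
    return [
        {"sku": match.group("sku"), "price": float(match.group("price"))}
        for match in PRICE_PATTERN.finditer(html)
    ]


def to_json(records):
    """Convert the scraped records into the output format (JSON here)."""
    return json.dumps(records, indent=2)


records = extract_prices(PRODUCT_HTML)
report = to_json(records)
```

Running this once a day and comparing the saved reports is, in essence, how a price tracker notices changes.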

Is Data Scraping Illegal?

Data that is publicly available and not copyrighted is generally fair game to be scraped. However, commercial use of scraped data is limited; for instance, video titles on YouTube could be legally scraped, but the videos themselves could not be scraped and reused since they are copyrighted material. Scraping data from sites that require authentication, or from sites that explicitly prohibit scraping in their terms of service (ToS), can also expose the scraper to legal liability. For example, logging into a site such as Instagram and then scraping user data would not be permitted. Scraping that violates the Computer Fraud and Abuse Act (CFAA) or trespass to chattels law is also unlawful, and scrapers are expected to honor a site's robots.txt rules, although robots.txt is a voluntary convention rather than a law. Unfortunately, large companies have suffered large data leaks because of illegal scraping. The risks of scraping are especially apparent in the recently uncovered Facebook breach, in which the data of 533 million users was leaked online. Still, corporations with publicly accessible data may not be free to completely block scraping, as doing so can be considered “malicious interference with a contract,” which is against the law in the United States. However, it is possible to slow down scraping, which is essential to ensure user protection.
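A scraper can check robots.txt rules before requesting a page. The sketch below uses Python's standard `urllib.robotparser` module; the rules, the `example-bot` user agent, and the URLs are hypothetical, and the rules are parsed from a string rather than downloaded. Passing this check only means the site's robots.txt does not object to the request; it says nothing about copyright, terms of service, or the CFAA.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would fetch it from
# the site's /robots.txt path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt rules permit fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


public_ok = is_allowed(ROBOTS_TXT, "example-bot", "https://example.com/public/page")
private_ok = is_allowed(ROBOTS_TXT, "example-bot", "https://example.com/private/data")
```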

How can Scraping be Mitigated?

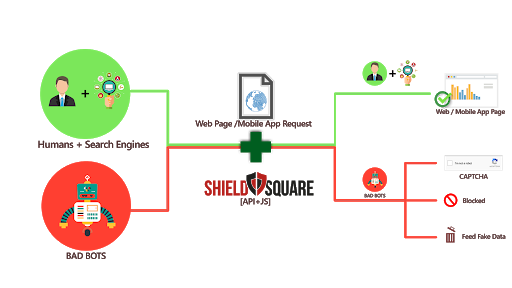

Although scraping cannot be prevented entirely, slowing it down is possible. Companies should consider a technique such as rate throttling, which limits how many pages a single client can download in a given period. Another option is a CAPTCHA, which tests whether a human or a web scraper is requesting the page. Additional preventative measures include changing the pattern of the HTML file, making the code less readable through obfuscation, and providing fake information. The last of these includes cloaking, which returns an altered page when a bot requests a real page. However, this method is frowned upon by search engines such as Google, and websites that use it risk being removed from their index. Ultimately, these techniques deter web scraping by making it slower and less cost-effective.
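Rate throttling can be implemented in several ways; one common approach is a sliding window per client. The Python sketch below is an assumption about how such a limiter might look, not a description of any particular product. The class name, the limits, and the use of an IP address as the client identifier are all illustrative, and the current time is passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class SlidingWindowThrottle:
    """Allows at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client_id -> recent request times

    def allow(self, client_id, now):
        """Record and allow the request, or reject it if over the limit."""
        hits = self._hits[client_id]
        # Forget requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # rejected requests are not recorded
        hits.append(now)
        return True


throttle = SlidingWindowThrottle(max_requests=3, window_seconds=10.0)
# Hypothetical client making four requests within three seconds.
decisions = [throttle.allow("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0)]
```

In a real server, `now` would typically come from `time.monotonic()`, and a rejected request would receive an HTTP 429 response. A human reader rarely hits such a limit, while a scraper downloading hundreds of pages per minute is slowed considerably.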

Even then, the most effective technique is simply not to post private information on your website, since scrapers cannot steal what is not there. This matters because search engines are the biggest scrapers in the world; they use the technique to index content for billions of searches per day. Also, steps taken to limit scrapers from accessing public information can harm the average user’s experience by impeding fast and easy access to your website. Additionally, companies should look to options such as humanID to further improve user security and privacy, and could potentially use humanID in place of CAPTCHA.

All in all, web and data scraping are often not illegal by themselves, but misuse of these methods can be against the law. Unfortunately, it can also be unlawful to block scraping completely, and a powerful scraping algorithm will find a way around prevention methods such as throttling to reach all available data. Therefore, to best protect a website from scrapers, companies should simply refrain from posting private information or valuable metadata on public websites.